Research

Click on an image to see a description of that project!

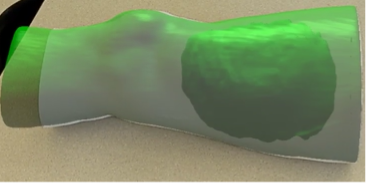

Radiation Visualization using Augmented Reality

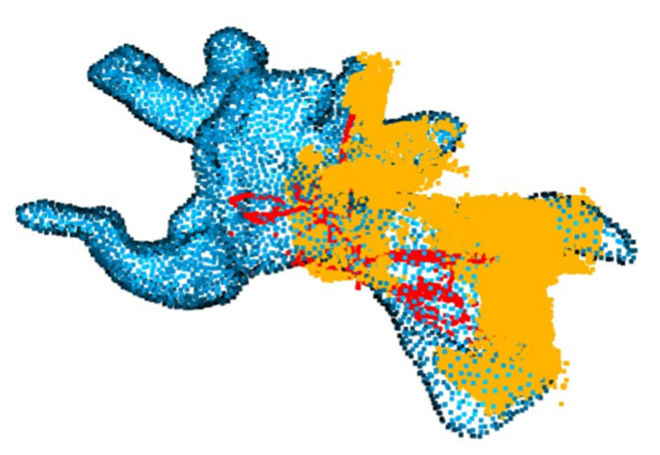

Sensorless Force Estimation on da Vinci Research Kit

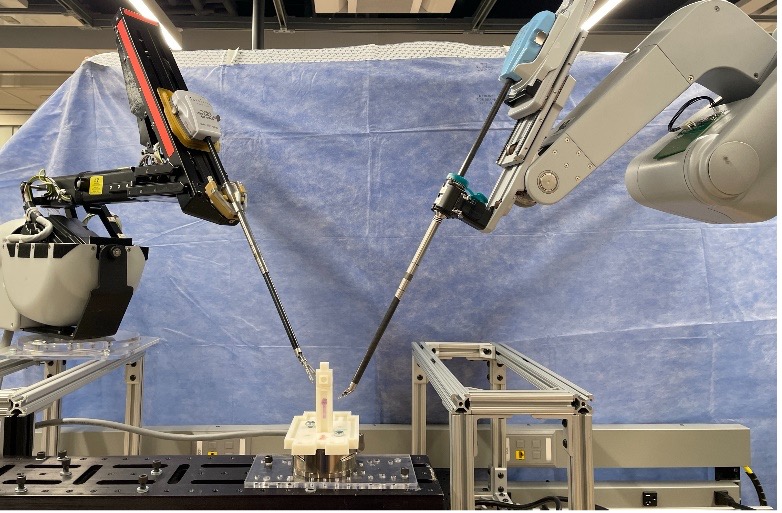

Robust Eye Tracking for da Vinci Surgical System

Surgical Training and Skill Assessment through Augmented Reality

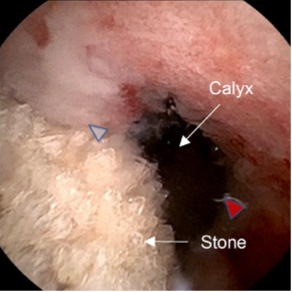

Vision-based Navigation in Endoscopic Kidney Surgeries